Rapid digital transformation and the push to accelerate innovation are expanding the volume and variety of data from dynamic, increasingly complex and data-siloed environments.

Also, the shift to remote work environments in response to COVID-19 has resulted in organizations quickly embracing digital transformation to connect remote workers to the workplace.

This shift has created security challenges.

Data Security Challenges

According to a Randstad U.S. survey, 63% of respondents said the pandemic has made their companies take advantage of digital transformation sooner than they had expected. For 49% of leaders responding, digital transformation was not a strategic priority before the pandemic.

With more than 4 million U.S. employees working remotely, and that number forecast to reach 36.2 million by 2025, there’s a need to assess the long-term security of collecting and storing data.

Business and operational model changes have created a greater dependence on data for business and operations. And with that comes new challenges about how data is accessed and analyzed.

Microservices-based architectures, complex systems and data silos surpass the capabilities of traditional log monitoring solutions. These legacy solutions can only provide a partial view into the data. They don’t provide the context to understand what’s happening and why.

A full, multi-stack observability platform is critical for IT, DevOps and business operations teams to gain visibility into the health of their apps and services and to proactively detect and remediate threats.

With the need to consume, aggregate and analyze fragmented data in multi-cloud environments and secure remote work models, there comes complexity and the search for more cost-efficient observability models.

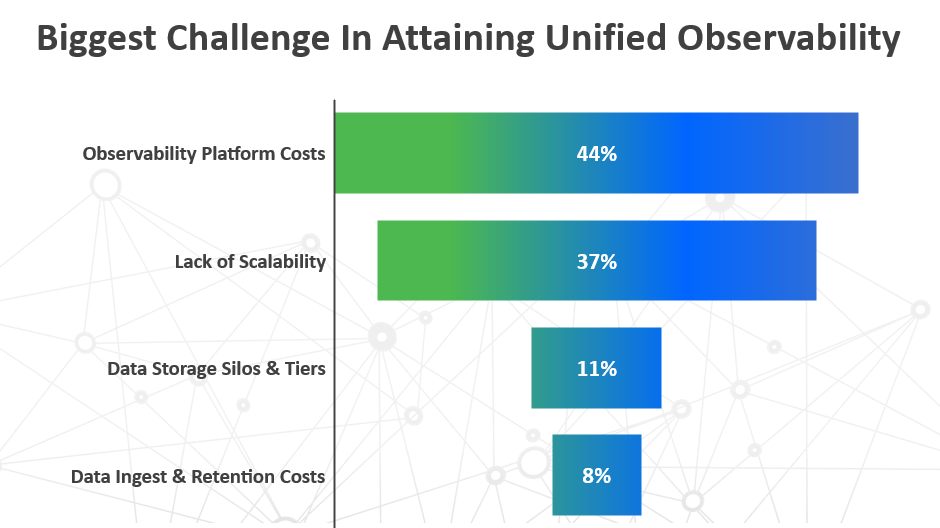

Our recent poll, which asked for your biggest challenge in attaining observability, shows that observability platform costs were your greatest challenge. This was closely followed by the lack of scalability.

Cost Challenges

Observability platform costs are the primary concern right now. That’s because of increasing data volumes from distributed infrastructures, cloud apps and cloud platforms. These applications generate a lot of log data. And, subsequently, there’s more to monitor.

Having all these new cloud apps, new cloud platforms and a distributed network means it’s now more important that you can observe data, observe infrastructure and figure out what’s wrong and why there’s a problem.

Needing to scale out, collect more data, and have better analytics and visualization of that data can drive up costs. Not all observability solutions are the same. Understanding how to navigate the options and how that impacts your organization is crucial.

As you navigate your options and determine what works best for your organization, we have provided three factors to consider when choosing an observability platform.

Right Platform

Technology plays a key role in responding to changing business needs. As organizations advance with their digital transformation, the platform becomes the foundation on which the ecosystem of applications, processes and technologies is developed.

Some organizations may have amassed a complex network of technologies, implemented over years to support capabilities and respond to changing needs. The challenge is that legacy, cloud-hosted solutions are built for low data volumes and shorter data retention. As data volumes increase, scaling up with the legacy platforms can become cost prohibitive.

Modern cloud native platforms, however, provide software standardization and improved integration, which aid in simplifying your overall architecture.

- Platforms based on a cloud infrastructure provide simplicity and enhanced functionality.

- A cloud native infrastructure simplifies the many technology layers of servers, databases, operating systems and applications, enabling orchestration innovation.

- A cloud native infrastructure is designed to embrace rapid change, large scale and stack resilience.

- Cloud native platforms offer seamless and unlimited scalability, allowing compute flexibility based on your fluctuating data capacity needs.

One of the leading cloud native platforms is the Snowflake Cloud Data Warehousing Platform. Snowflake’s platform stores and processes diverse data, both structured and semi-structured, in a centralized system. And because Snowflake is cloud based, you can also store as much data as needed.

Snowflake architecture allows near-infinite scaling of data, compute and user concurrency. Not only does the Snowflake platform remove complexities and enable fast and easy access to data, but it also reduces overhead and maintenance costs. And it only charges for the compute and storage you use.

Unlimited Data with a Centralized Data Cloud

Modern technology platforms are designed to accommodate an ever-increasing volume of data. The need to monitor, measure, analyze and act on data is crucial, not only to provide valuable business intelligence but also for security and compliance. IT Ops, DevOps and security teams need observability of all log data, metrics and traces with longer data retention.

Standard application performance monitoring has limitations in today’s increasingly dynamic and distributed multi-application cloud environments. Standard application monitoring simply can’t keep up.

Observability is critical for bringing together the outputs of metrics, events, logs and traces from various applications for a real-time view of how systems are performing and where issues might be occurring. Observability helps cross-functional teams understand and answer specific questions about what’s happening in highly distributed systems.

And it answers the questions: What is slow? What is broken? Why is this happening? What needs to be done?
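As a rough illustration of how combined signals can answer the first two of those questions, here is a minimal Python sketch. The `Signal` record, the service names and the 500 ms latency threshold are all hypothetical and do not come from any particular observability product; a real platform would work on far richer data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Signal:
    service: str   # hypothetical service name
    kind: str      # "metric", "log" or "trace"
    name: str      # e.g. "latency_ms" or "error"
    value: float   # metric value; 1.0 for a single log occurrence


def summarize(signals, latency_threshold_ms=500.0):
    """Group signals by service and flag services that look slow or broken."""
    by_service = defaultdict(list)
    for s in signals:
        by_service[s.service].append(s)

    report = {}
    for service, items in by_service.items():
        latencies = [s.value for s in items
                     if s.kind == "metric" and s.name == "latency_ms"]
        errors = [s for s in items if s.kind == "log" and s.name == "error"]
        report[service] = {
            # "What is slow?": any latency sample above the assumed threshold
            "slow": bool(latencies) and max(latencies) > latency_threshold_ms,
            # "What is broken?": any error log for the service
            "broken": len(errors) > 0,
            "error_count": len(errors),
        }
    return report


# Hypothetical sample data
signals = [
    Signal("checkout", "metric", "latency_ms", 820.0),
    Signal("checkout", "log", "error", 1.0),
    Signal("search", "metric", "latency_ms", 120.0),
]
report = summarize(signals)
print(report)
```

The point of the sketch is only that metrics and logs for the same service are evaluated together; answering "why" and "what needs to be done" takes the additional context that traces and historical data provide.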

As machine-generated and cloud-based data grows exponentially, so does the cost to keep historical data. To alleviate this, consider an observability platform with infinite compute scale-up and scale-out capabilities and unlimited cloud storage. This is not only cost-effective but can help you scale for the future by accommodating your expanding data and IT needs.

A centralized data cloud allows your organization to capture, store, secure and retrieve your information on a single system. This eliminates complex data silos and tiered, node-based architectures, supplying added benefits of data integrity and security. All agile IT and security operators recognize the benefits of having real-time access to data. And they understand the more data they have available to them, the better they are equipped to do their job.

Data Compression

However, storing all this data can be prohibitively expensive. Different observability solutions approach storage differently, with rates from $30 per year, per terabyte to more than $1,000 per year, per terabyte.

To minimize costs, you should consider observability solutions that provide data compression. Data compression can bring down costs by 70% to 90%.

In a centralized data cloud environment, data is compressed 7x to 10x with optimized storage footprints. Customers no longer need to manage separate archives or cold storage systems. In tiered architectures, finding the balance between how much data to keep in hot storage, warm storage and eventually cold storage is challenging. On top of that, configuring an elaborate architecture consisting of diverse types of nodes and finding the balance between disk storage, memory and compute is often a full-time job.
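To see how compression ratios translate into storage costs, here is a small Python sketch. It uses the per-terabyte rates and the 7x to 10x ratios quoted above, but the 100 TB data volume is a hypothetical figure, and the sketch assumes cost scales linearly with the number of terabytes actually stored.

```python
def annual_storage_cost(raw_tb, rate_per_tb_year, compression_ratio=1.0):
    """Yearly cost of keeping raw_tb terabytes once compressed."""
    stored_tb = raw_tb / compression_ratio
    return stored_tb * rate_per_tb_year


def savings_fraction(compression_ratio):
    """Share of the storage bill removed by a given compression ratio."""
    return 1.0 - 1.0 / compression_ratio


raw_tb = 100  # hypothetical: 100 TB of raw log data retained
for rate in (30, 1000):          # $ per TB per year, from the range above
    for ratio in (1, 7, 10):     # no compression, 7x, 10x
        cost = annual_storage_cost(raw_tb, rate, ratio)
        print(f"${rate}/TB/yr at {ratio}x: ${cost:,.0f} per year "
              f"({savings_fraction(ratio):.0%} saved)")
```

Under these assumptions, 7x compression removes about 86% of the storage bill and 10x removes 90%.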

Pricing Model

The increasing cost of current search and observability solutions is forcing many organizations to compromise on data collection and retention to control costs. Common pricing models include:

Volume or ingest-based pricing model. Pricing is based on your pre-determined maximum data ingest thresholds. The capacity requirements are based on your pre-determined requirements, with the ability to scale up at an added cost. What you must consider with this model is the risk of over-estimating the compute you need, leaving money on the table.

Capacity-based or node-based pricing model. Pricing with this model is per server node. There’s more flexibility with this pricing model to increase the number of servers when you need more capacity. But it also requires added manual operational overhead, adding and managing more servers.

User-based subscription pricing model. Pricing with this model is by number of users. This model is limited by the number of users and the applications they can access or subscribe to.

Usage-based pricing model. This is a new pricing model. It’s more budget-friendly, with the ability to pay for only what you use. It provides on-demand scalability and is fully automated, with no need to plan for other resources for manual processes. You simply keep as much data as you want and pay commodity rates for what you use.
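The difference between committing to an ingest ceiling and paying for actual usage can be sketched with a few lines of Python. Every number here is hypothetical, including the daily volumes and the unit price, and the two models are given the same unit price purely to isolate the effect of over-provisioning. Real vendor pricing will differ.

```python
def ingest_based_cost(committed_gb_per_day, price_per_gb_day, days):
    """Pay for the committed daily ingest ceiling whether or not it is used."""
    return committed_gb_per_day * price_per_gb_day * days


def usage_based_cost(daily_usage_gb, price_per_gb):
    """Pay only for the data actually handled each day."""
    return sum(gb * price_per_gb for gb in daily_usage_gb)


# Hypothetical week: mostly quiet days with one spike
daily_usage_gb = [40, 35, 50, 200, 45, 30, 20]
price = 1.0  # hypothetical unit price, identical for both models

# The ingest-based plan has to be sized for the busiest day
committed = ingest_based_cost(max(daily_usage_gb), price, len(daily_usage_gb))
metered = usage_based_cost(daily_usage_gb, price)

print(f"ingest-based (sized for peak): ${committed:,.2f}")
print(f"usage-based (metered):         ${metered:,.2f}")
print(f"paid for unused capacity:      ${committed - metered:,.2f}")
```

In this made-up week, sizing the plan for the one-day spike means paying for capacity that sits idle on the other six days, which is the "money on the table" risk of the ingest-based model.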

Conclusion

As organizations are moving to cloud applications, security teams and IT teams are being challenged with a lack of visibility into events and metrics. Yet security and IT teams need full-stack observability to identify, remediate and prevent performance issues in real time.

As the demand for continuous innovation adds technology complexities and the need for more scalable, agile and secure operations, you need a full-stack observability platform to reduce complexity and risk and provide a unified view of all data, without worrying about cost and overhead.

In summary, when considering observability platforms, the questions you need to ask are:

- Is it cloud native?

- Does it have an unlimited centralized data cloud, with optimal data modeling, and the best compression?

- What is the pricing model? Does that pricing model work for you and the way you would use it?

Need More Information?

System Soft Technologies (SSTech) and Elysium Analytics can serve as trusted partners and help your organization retain, search and analyze more data, without breaking your budget.

Elysium Analytics redefines observability solutions with a cloud-native platform built on Snowflake. It provides unlimited scalability and data compression of up to 10x. It’s also the first company to introduce usage-based pricing in the analytics industry, with access to as many compute resources as you need, without limitations.

Contact System Soft today. Learn how to achieve a no-compromise observability strategy, with access to full text search, dashboards, alerting, machine learning-based analytics and reports on all your data at a far lower cost with usage-based pricing.

[Watch on-demand webinar: Achieving Observability for Security at 80% Reduced Cost; How Innominds Gained Full Security Coverage at Less Cost and Unified Observability]

About the Author: Jens Andreassen

Jens Andreassen is the CEO at Elysium Analytics, a leading cloud-scale data warehouse running on Snowflake and a technology partner of System Soft Technologies. With more than 25 years of global sales and business development experience and more than 15 years working with leading enterprise security corporations, Jens leads Elysium Analytics, which provides full text search, operational and security dashboards, using machine learning analytics and models.